Model behavior: How Metaplane's ML model sees what others miss

Data observability tools all make similar promises about how their data monitoring works. "Detect anomalies! Reduce noisy alerts!" The underlying models powering these features, though, are anything but similar. Read on to learn how we approach modeling here at Metaplane, and how it's made all the difference for our customers.

You’d be forgiven for thinking that machine learning models are more or less the same. In fact, many of them are. A lot of the machine learning you interact with is probably powered by the same underlying models—maybe with a few tweaks.

At Metaplane, we've taken a fundamentally different approach to building our data observability solution—one that's purpose-built for the unique patterns and challenges of data systems.

Let's pull back the curtain on what makes our ML model different, why it matters, and how it translates to a better experience for your team.

Beyond off-the-shelf: Why generic models fall short for data purposes

Most data observability tools rely on general-purpose time series models that weren't designed with data observability in mind. These off-the-shelf solutions (Facebook's Prophet, for example) are built for forecasting, not anomaly detection.

The problem: These models prioritize minimizing forecast error rather than correctly identifying true anomalies. They're optimized for the wrong objective.

When we started building Metaplane, we initially used these same models. But we quickly discovered their limitations:

- They infer trends where none exist

- They adapt too slowly to legitimate changes in data patterns

- They generate predictions that make little sense in the context of data systems

Off-the-shelf models tend to bombard you with way too many alerts—and most of them are inaccurate. We knew that if we wanted Metaplane’s monitors to truly solve the problem of anomaly detection, we had to build something better.

The Metaplane approach: A model purpose-built for data observability

Rather than forcing generic algorithms to work for data observability, we built our own bespoke ML model designed specifically for the patterns we see in data systems.

1. Pattern-specific modeling

Consider a row count metric that follows a staircase pattern: flat between batch loads, then stepping up when a batch is processed. Rather than treating this as a generic time series, we model:

- What size the steps should be

- How long they should last

- When updates should occur

This approach allows for fine-grained precision in detecting issues, such as:

- Missing updates during expected update windows

- Increases that are smaller than historically observed patterns
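To make the staircase idea concrete, here is a minimal sketch in Python. It is an illustration only, not Metaplane's actual model: the `StaircaseProfile` class, the `fit_staircase` and `check_staircase` functions, and the ratio thresholds are all hypothetical names and values chosen for this example. It learns a typical step size and a typical spacing between steps from history, then flags the two issues listed above.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class StaircaseProfile:
    """Hypothetical summary of a staircase-shaped row count history."""
    typical_step: float  # median size of past increases
    typical_gap: float   # median number of observations between increases


def fit_staircase(counts: list[float]) -> StaircaseProfile:
    """Learn the typical step size and spacing from past observations."""
    steps, gaps, last_step_idx = [], [], None
    for i in range(1, len(counts)):
        delta = counts[i] - counts[i - 1]
        if delta > 0:
            steps.append(delta)
            if last_step_idx is not None:
                gaps.append(i - last_step_idx)
            last_step_idx = i
    if not steps or not gaps:
        raise ValueError("need at least two increases to fit a staircase")
    return StaircaseProfile(median(steps), median(gaps))


def check_staircase(
    profile: StaircaseProfile,
    observations_since_step: int,
    latest_delta: float,
    min_step_ratio: float = 0.5,  # assumed threshold, not from the article
    max_gap_ratio: float = 1.5,   # assumed threshold, not from the article
) -> list[str]:
    """Return the staircase issues visible in the latest observation."""
    issues = []
    overdue = observations_since_step > profile.typical_gap * max_gap_ratio
    if latest_delta == 0 and overdue:
        issues.append("missing_update")
    if 0 < latest_delta < profile.typical_step * min_step_ratio:
        issues.append("small_increase")
    return issues


history = [100, 100, 100, 110, 110, 110, 120, 120, 120, 130]
profile = fit_staircase(history)
stale = check_staircase(profile, observations_since_step=6, latest_delta=0)
small = check_staircase(profile, observations_since_step=1, latest_delta=2)
```

In this toy history the table grows by 10 rows every third observation, so six flat observations in a row count as a missing update, and an increase of 2 rows counts as unusually small.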

2. Distribution-aware monitoring

Unlike general-purpose models that assume normal distributions around forecasts, we recognize that data metrics often follow specific non-normal patterns:

- Row counts in tables that should never decrease

- Step-function changes when batches are processed

- Mean shifts that indicate legitimate business changes

We built our models to understand these specific dynamics rather than forcing them into standard statistical frameworks.
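One way to picture distribution-aware bounds is the sketch below. It is an assumption-laden illustration, not a description of Metaplane's internals: the `delta_bounds` and `is_anomalous` functions, the 5th and 95th percentile cut points, and the `widen` factor are hypothetical. Instead of a symmetric normal band, it derives bounds from the empirical quantiles of past changes and clamps the lower bound at zero for metrics that should never decrease.

```python
from statistics import quantiles


def delta_bounds(
    history: list[float],
    never_decreases: bool = False,
    widen: float = 1.0,  # assumed tolerance factor, not from the article
) -> tuple[float, float]:
    """Bounds on the next change, taken from empirical quantiles of past changes."""
    deltas = [b - a for a, b in zip(history, history[1:])]
    cuts = quantiles(deltas, n=20, method="inclusive")
    q05, q95 = cuts[0], cuts[-1]  # 5th and 95th percentiles
    spread = q95 - q05
    lower, upper = q05 - widen * spread, q95 + widen * spread
    if never_decreases:
        # Encode the domain rule directly: any drop is out of bounds.
        lower = max(lower, 0.0)
    return lower, upper


def is_anomalous(history: list[float], new_value: float, **kwargs) -> bool:
    """Flag the new value if its change from the last value is out of bounds."""
    lower, upper = delta_bounds(history, **kwargs)
    delta = new_value - history[-1]
    return delta < lower or delta > upper


row_counts = [0, 0, 0, 5, 5, 5, 10, 10, 10, 15]
drop_generic = is_anomalous(row_counts, 12)
drop_aware = is_anomalous(row_counts, 12, never_decreases=True)
```

Here a drop of 3 rows sits inside the generic band, so `drop_generic` is `False`, while the same drop is flagged once the monitor knows the table should never shrink.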

Faster, more responsive learning

Beyond more accurate alerts, building our own model also made our monitoring far more responsive. How? We retrain our model after every new observation.

While this might seem like a small detail, it's transformative for accuracy. Instead of retraining models daily or weekly (as competitors do), we continuously update our understanding of your data. This means:

- Faster adaptation to evolving patterns

- More accurate anomaly detection right after legitimate changes

- Better handling of seasonal and cyclical patterns
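The article does not say how this retraining is implemented, so the following is only a generic sketch of what updating after every observation can look like. It uses Welford's online algorithm to maintain a running mean and standard deviation in constant time per point. The `OnlineBaseline` class and its `is_outlier` helper are hypothetical, and a mean and standard deviation band is a simple stand-in for whatever the real model learns.

```python
import math


class OnlineBaseline:
    """Running mean and sample standard deviation via Welford's algorithm."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        """Fold one new observation into the baseline in O(1)."""
        self.n += 1
        d = x - self.mean
        self.mean += d / self.n
        self._m2 += d * (x - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def is_outlier(self, x: float, k: float = 3.0) -> bool:
        """Check x against the current baseline (k is an assumed threshold)."""
        if self.n < 2:
            return False
        return abs(x - self.mean) > k * self.std


baseline = OnlineBaseline()
for value in [2, 4, 4, 4, 5, 5, 7, 9]:
    baseline.update(value)  # the baseline is current after every point
```

Because each update is cheap, there is no need to wait for a daily or weekly batch job: the baseline reflects the newest observation as soon as it arrives.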

Metaplane has transformed how we operate—especially the GROUP BY monitors. They catch even the smallest variations in our data, I can finally rest easy knowing every source of every size is covered.

— Nicole Dallar-Malburg, Senior Analytics Engineer at Bluecore

Keeping humans in the loop

One of the most significant differences in our approach to modeling is a philosophical one: we believe monitoring should be a collaborative process between human expertise and machine learning. Here’s how this plays out practically:

The human-ML partnership

Our approach centers on the idea that you should be able to tell the model what matters to you:

- Annotations: When we alert you about something unusual, you can mark it as normal, and we'll never alert you on that pattern again.

- Configuration options: Extensive settings let you tailor each monitor to your specific metric and business context.

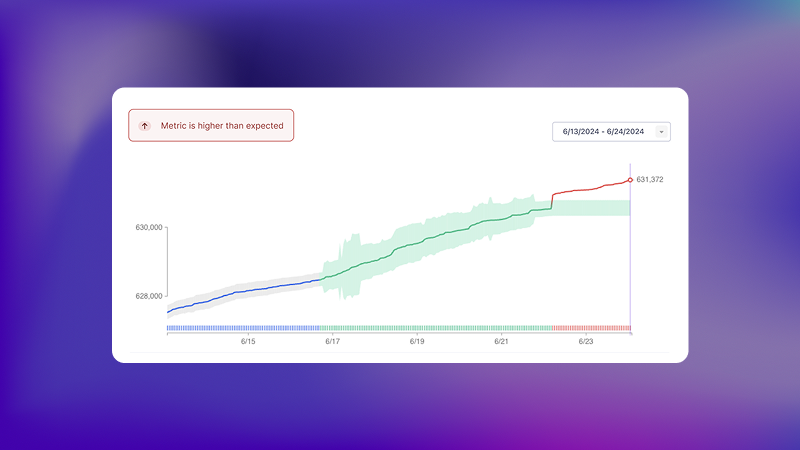

- Alert persistence: Unlike competitors, when we detect an anomaly, we keep the alert active until the metric returns to normal, or until you tell us that you expected this behavior.

- No anomaly incorporation: We don't automatically incorporate anomalies into our baseline—preventing the "normalization of deviance" that plagues other systems.

This human-in-the-loop process creates a virtuous cycle where the model continuously improves based on your feedback, increasingly aligning with what matters to your business.
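The feedback loop described above can be sketched as a small state machine. This is a simplified illustration under stated assumptions, not Metaplane's implementation: the `Monitor` class, its fixed `lower` and `upper` bounds, and the `mark_as_normal` method are hypothetical. It shows three of the behaviors listed: alerts persist while the metric stays out of bounds, flagged values stay out of the baseline, and user feedback is what admits them.

```python
from dataclasses import dataclass, field


@dataclass
class Monitor:
    """Hypothetical monitor with persistent alerts and user annotations."""
    lower: float
    upper: float
    baseline: list[float] = field(default_factory=list)
    pending: list[float] = field(default_factory=list)  # flagged, not learned
    alert_active: bool = False

    def observe(self, value: float) -> bool:
        """Record a value and return whether an alert is currently active."""
        if self.lower <= value <= self.upper:
            self.baseline.append(value)
            self.pending.clear()       # unconfirmed anomalies are discarded
            self.alert_active = False  # metric returned to normal
        else:
            self.pending.append(value)  # kept out of the baseline
            self.alert_active = True    # stays active on repeat violations
        return self.alert_active

    def mark_as_normal(self) -> None:
        """User feedback: the flagged values were expected behavior."""
        self.lower = min([self.lower, *self.pending])
        self.upper = max([self.upper, *self.pending])
        self.baseline.extend(self.pending)
        self.pending.clear()
        self.alert_active = False


monitor = Monitor(lower=90, upper=110)
monitor.observe(100)           # in bounds, learned
first = monitor.observe(150)   # anomaly: alert opens
second = monitor.observe(150)  # still out of bounds: alert persists
learned_before = list(monitor.baseline)
monitor.mark_as_normal()       # user says this level is expected
after = monitor.observe(150)   # no longer alerts
```

The design choice worth noting is that nothing out of bounds enters `baseline` on its own. Only an explicit annotation widens what the monitor treats as normal.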

The UX of a purpose-built model

What does all this technical sophistication mean for you as a user? A fundamentally better experience:

1. Smarter alerts

No more alert fatigue from the same false positives repeatedly triggering. When you mark something as normal, we learn from it permanently.

- Competitor approach: The same benign spike triggers alerts week after week

- Metaplane approach: You annotate once, and we never bother you about that pattern again

With Metaplane, more than 80% of alerts are legitimate issues that require my attention. The product speaks for itself. If something breaks, you'll know.

— Marion Pavillet, Senior Analytics Engineer at Mux

2. Persistent visibility of issues

We won't automatically resolve an alert just because the system adapted to the new normal. If data suddenly drops and stays down, you'll know about it until it's resolved or explicitly acknowledged.

3. Customization without complexity

Extensive configuration options let you define what matters:

- Set minimum bounds

- Configure upper-bound-only alerts

- Customize thresholds to your specific needs
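As a rough sketch of how such settings could combine with a model's bounds, consider the example below. The `MonitorConfig` fields and the `should_alert` function are hypothetical names invented for illustration and do not reflect Metaplane's real configuration schema. In particular, treating a minimum bound as a hard floor and a threshold as a band-width multiplier are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MonitorConfig:
    """Hypothetical per-monitor settings."""
    min_bound: Optional[float] = None  # hard floor; always alert below it
    upper_only: bool = False           # ignore dips below the model's band
    sensitivity: float = 1.0           # >1 widens the band, <1 tightens it


def should_alert(
    value: float, model_lower: float, model_upper: float, cfg: MonitorConfig
) -> bool:
    """Combine the model's predicted band with user configuration."""
    if cfg.min_bound is not None and value < cfg.min_bound:
        return True
    center = (model_lower + model_upper) / 2
    half_width = (model_upper - model_lower) / 2 * cfg.sensitivity
    if value > center + half_width:
        return True
    if not cfg.upper_only and value < center - half_width:
        return True
    return False


default_dip = should_alert(50, 90, 110, MonitorConfig())
upper_only_dip = should_alert(50, 90, 110, MonitorConfig(upper_only=True))
upper_only_spike = should_alert(120, 90, 110, MonitorConfig(upper_only=True))
```

With the default settings a dip to 50 alerts, while an upper-bound-only monitor ignores the same dip and still catches a spike to 120.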

4. Always-current models

Since we retrain continually, our models stay relevant even as your data evolves. This means fewer false positives after legitimate business changes and faster detection of new issues.

Overall, Metaplane’s custom-built model delivers these real-world benefits:

- Reduced alert fatigue: Focus on genuine issues instead of repetitive noise

- Faster issue resolution: More accurate alerts mean less time investigating false positives

- Better coverage: Detect subtle issues that generic models would miss

- True partnership: A system that learns from your expertise rather than ignoring it

Beyond the algorithm

While we're proud of our approach, Metaplane's value goes beyond just having a purpose-built algorithm. We've built a complete observability platform that adapts to your unique data environment and empowers your team to maintain data reliability.

Our goal isn't just to send alerts—it's to create a collaborative system where human expertise and machine learning work together to ensure your data is reliable, trustworthy, and delivering value to your organization.

Want to try it out? Request a demo today and see how Metaplane's purpose-built approach can transform your data observability.
