Under the hood: How to build custom dbt alerting infrastructure

Last week, we announced our new dbt alerting tool—a free, standalone tool for all dbt users who need more context-rich alerts. In this post, we’ll take a peek behind the scenes at how the tool actually works.

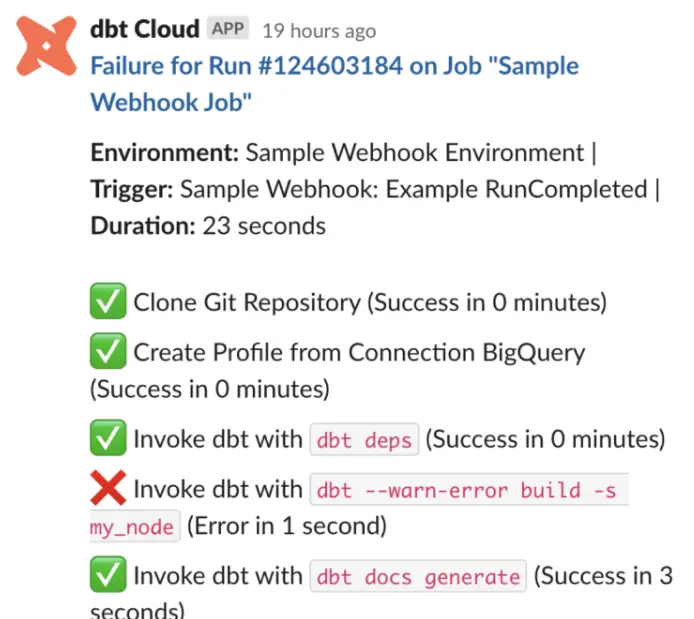

We were excited to release our free dbt alerting tool last week. Native dbt alerts did not fit the needs of our team, so we built infrastructure that sends more actionable and configurable alerts to our Slack channels, all configured within our existing dbt YAML. And we made it a free tool so that anyone else looking for better dbt alerting could reap the benefits as well.

While announcing the tool last week, we gave context into why we built our own version of dbt alerts—more granularity, richer context, and better routing being the main drivers.

But for this article, we wanted to peel back the curtain and get a little bit more technical about how it actually works and some of the interesting challenges we faced along the way.

Let's take a deep dive into how our dbt alerting tool operates under the hood.

The foundation: dbt artifacts

Our alerting tool's core functionality revolves around two key artifacts produced by every `dbt run` or `dbt build` command:

1. manifest.json: This file contains definitions and metadata about all resources (i.e. nodes) in the dbt project, including models, tests, seeds, sources, and exposures.

2. run_results.json: This file records the outcome of each dbt resource executed as part of the command, including its status, timing, and any failure message.

These artifacts serve as the foundation for our alerting system, allowing us to extract crucial information about the dbt project structure and execution results.
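As a rough illustration (not the tool's actual code), reading the two artifacts might look like the sketch below. The `results`, `unique_id`, and `status` keys come from dbt's artifact schema. The function names are hypothetical, and treating only `error` and `fail` as alert-worthy statuses is an assumption.

```python
import json
from pathlib import Path


def load_artifacts(target_dir):
    """Read both artifacts from a dbt target directory into plain dicts."""
    target = Path(target_dir)
    manifest = json.loads((target / "manifest.json").read_text())
    run_results = json.loads((target / "run_results.json").read_text())
    return manifest, run_results


def index_results(run_results):
    """Map each executed node's unique_id to its result entry."""
    return {r["unique_id"]: r for r in run_results.get("results", [])}


def failed_results(run_results):
    """Keep only results whose status signals a problem.

    Assumption: "error" and "fail" are the statuses worth alerting on;
    a real setup might also include "warn".
    """
    bad_statuses = {"error", "fail"}
    return [
        r for r in run_results.get("results", [])
        if r.get("status") in bad_statuses
    ]
```

Calling `load_artifacts("target")` from the project root would pick up the files dbt writes to its default `target/` directory.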

Configuring alerts: The power of exposures

One of our primary goals when building this tool was to allow users to configure more granular alert rules within their dbt projects. We realized, though, that there wasn’t a simple way to include custom metadata at the top level of a dbt project. We wanted to support as many dbt setups as possible, so it was important that any top-level metadata we wrote would eventually wind up in the `manifest.json` file.

To achieve this without modifying dbt's core functionality, we leveraged dbt exposures. By storing custom configuration data in a specially named exposure that our tool knows to look for, we were able to:

- Define top-level rules for alert routing.

- Avoid users having to manually update the `meta` property of each model they wanted to have an alert for.

- Support various dbt setups without requiring direct access to the underlying git repository.
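To make the idea concrete, here is a minimal sketch of pulling rules out of a specially named exposure. Exposures do appear under the `exposures` key of `manifest.json` and carry a `meta` dictionary. The exposure name `alerting_config` and the `rules` key are hypothetical placeholders, not the names the tool actually uses.

```python
# Hypothetical exposure name; the real tool looks for its own reserved name.
ALERT_EXPOSURE_NAME = "alerting_config"


def extract_alert_rules(manifest):
    """Return the rule list stored in the meta of the special exposure.

    Assumption: rules live under a "rules" key inside the exposure's meta.
    Returns an empty list when the exposure is missing.
    """
    for exposure in manifest.get("exposures", {}).values():
        if exposure.get("name") == ALERT_EXPOSURE_NAME:
            return (exposure.get("meta") or {}).get("rules", [])
    return []


# Illustrative manifest fragment with one configuration exposure.
sample_manifest = {
    "exposures": {
        "exposure.my_project.alerting_config": {
            "name": "alerting_config",
            "meta": {
                "rules": [
                    {
                        "filters": [{"kind": "tag", "value": "salesforce"}],
                        "destinations": [{"type": "slack", "channel": "#sf-alerts"}],
                    }
                ]
            },
        }
    }
}

rules = extract_alert_rules(sample_manifest)
```

Because the configuration travels inside the manifest, anything that can read the artifact can read the rules, with no repository access required.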

Alert rule structure

Now that we had a place for top-level rules, it was time to define what those rules should look like. Each alert rule in our system consists of two main components:

1. Filters: A list of conditions used to match nodes in the dbt project. These can include:

- Database path matching

- Tag-based matching

- Owner-based matching

2. Destinations: A list of alert destinations (e.g., Slack, Microsoft Teams, email) where notifications should be sent for matching nodes.
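One way to model this structure is sketched below. Everything here is illustrative: the class and field names are hypothetical, path matching is assumed to use glob patterns over `database.schema.name`, the owner is assumed to live in the node's `meta`, and a rule is assumed to match when any one of its filters matches. The source does not say whether filters are combined with AND or OR.

```python
from dataclasses import dataclass
from fnmatch import fnmatch


@dataclass
class Filter:
    kind: str   # "path", "tag" or "owner" (hypothetical labels)
    value: str


@dataclass
class AlertRule:
    filters: list
    destinations: list


def filter_matches(f, node):
    """Check a single filter against a manifest node dict."""
    if f.kind == "path":
        # Assumption: glob pattern over database.schema.name, case-insensitive.
        parts = (node.get("database"), node.get("schema"), node.get("name"))
        path = ".".join(str(p or "") for p in parts)
        return fnmatch(path.lower(), f.value.lower())
    if f.kind == "tag":
        return f.value in node.get("tags", [])
    if f.kind == "owner":
        # Assumption: ownership is recorded as meta.owner on the node.
        return (node.get("meta") or {}).get("owner") == f.value
    return False


def rule_matches(rule, node):
    """Assumption: a rule matches when any of its filters matches."""
    return any(filter_matches(f, node) for f in rule.filters)


node = {
    "database": "ANALYTICS",
    "schema": "salesforce",
    "name": "salesforce_orders",
    "tags": ["finance"],
    "meta": {"owner": "data-eng"},
}
rule = AlertRule(
    filters=[Filter("path", "analytics.salesforce.*")],
    destinations=[{"type": "slack", "channel": "#sf-alerts"}],
)
matched = rule_matches(rule, node)
```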

Now that the main configuration is covered, we can put all the pieces together to see how we take the configuration in the manifest.json and parse out relevant alerts for a dbt run.

The alerting process

Once connected, Metaplane will automatically listen for any new dbt job run and parse the associated alert rules from the manifest.json nodes we receive.

When a dbt job runs, our system follows these steps to generate and send alerts:

1. Configuration parsing: We extract the alert rules from the manifest.json file, focusing on our specially named exposure.

2. Rule application: The system applies the defined rules to the run_results.json file, creating a map of alert rules to matching node runs.

3. Data merging: We combine relevant data from both manifest.json and run_results.json to provide comprehensive context for each failed node.

4. Alert generation: Using the merged data, we create detailed alerts that include:

- Exact failure messages

- Failure counts

- Test names and types

- Affected tables or columns

5. Alert distribution: Finally, we package the alert information and send it to the specified destinations using their respective APIs.
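The middle steps (rule application, data merging, and alert generation) can be sketched roughly as follows. This is an illustration under stated assumptions, not the tool's implementation: the function names and the shape of a rule are hypothetical, and the `matches` predicate is supplied by the caller. The `column_name`, `test_metadata`, `message`, and `failures` fields are taken from dbt's artifact schema. Actually delivering the alert through a destination's API is left out.

```python
def build_alerts(manifest, run_results, rules, matches):
    """Return (destination, alert) pairs for every failed node a rule matches.

    `matches(rule, node)` is a caller-supplied predicate (hypothetical).
    """
    nodes = manifest.get("nodes", {})
    alerts = []
    for result in run_results.get("results", []):
        if result.get("status") not in ("error", "fail"):
            continue
        node = nodes.get(result["unique_id"], {})
        # Merge definition data (manifest) with execution data (run results).
        alert = {
            "unique_id": result["unique_id"],
            "name": node.get("name"),
            "resource_type": node.get("resource_type"),
            "test_type": (node.get("test_metadata") or {}).get("name"),
            "column": node.get("column_name"),
            "status": result["status"],
            "message": result.get("message"),
            "failures": result.get("failures"),
        }
        for rule in rules:
            if matches(rule, node):
                for destination in rule["destinations"]:
                    alerts.append((destination, alert))
    return alerts


def format_alert(alert):
    """Render an alert as plain text, skipping fields that are absent."""
    lines = [f"{alert['resource_type']} {alert['name']}: {alert['status']}"]
    if alert.get("test_type"):
        lines.append(f"test type: {alert['test_type']}")
    if alert.get("column"):
        lines.append(f"column: {alert['column']}")
    if alert.get("failures") is not None:
        lines.append(f"failing rows: {alert['failures']}")
    if alert.get("message"):
        lines.append(f"message: {alert['message']}")
    return "\n".join(lines)
```

Keeping the matching predicate separate from the merge step means the same loop works regardless of how filters are defined.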

Handling model-test relationships

One challenge we tackled was how to handle the one-to-many relationship between models and their associated tests. Specifically, a “parent” dbt model can have multiple dbt tests as “children,” and rules can match both models and tests. So if a test fails, should we send an alert on the test, the parent model, or both?

For example, let's say that the model `salesforce_orders` has a `not_null` check on the `order_id` column. If our alert rule was specifically for Salesforce models, the question is whether an alert should be sent for both the test and the model.

We decided to send an alert for the test failure whenever the rule matches either the test or a model it tests, since the test directly validates that model. In practice, this means that whenever we see a test failure, we evaluate the rule against both the test itself and the models that the test is applied to. If any of those match, we send an alert.
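A minimal sketch of that evaluation is shown below, assuming parents are found through the test node's `depends_on.nodes` list in `manifest.json` (a real field in the artifact). The function names and the tag-based predicate are hypothetical stand-ins for whatever rule matching is in use.

```python
def candidate_nodes(test_id, manifest):
    """Return the failed test node followed by the nodes it is applied to."""
    # Parents may be models (under "nodes") or sources (under "sources").
    lookup = {**manifest.get("sources", {}), **manifest.get("nodes", {})}
    test_node = lookup.get(test_id, {})
    parent_ids = (test_node.get("depends_on") or {}).get("nodes", [])
    parents = [lookup[p] for p in parent_ids if p in lookup]
    return [test_node] + parents


def should_alert(test_id, manifest, node_matches):
    """Alert if the rule matches the test itself or any of its parents."""
    return any(node_matches(n) for n in candidate_nodes(test_id, manifest))


manifest = {
    "nodes": {
        "model.my_project.salesforce_orders": {
            "name": "salesforce_orders",
            "tags": ["salesforce"],
        },
        "test.my_project.not_null_salesforce_orders_order_id": {
            "name": "not_null_salesforce_orders_order_id",
            "tags": [],
            "depends_on": {"nodes": ["model.my_project.salesforce_orders"]},
        },
    }
}


def is_salesforce(node):
    """Hypothetical rule: match anything tagged "salesforce"."""
    return "salesforce" in node.get("tags", [])


alert_needed = should_alert(
    "test.my_project.not_null_salesforce_orders_order_id", manifest, is_salesforce
)
```

Here the test carries no tag of its own, but the rule still fires because its parent model `salesforce_orders` matches.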

Providing context-rich alerts

At the heart of our decision to build this tool was the idea of providing more context for each dbt alert so that someone could diagnose what went wrong directly from the alert itself instead of having to pore over docs.

To make our alerts truly actionable, we implemented two key features:

1. Easy access to test failures: We created a macro (GitHub repo) that downloads failing rows to a Snowflake stage and attaches the link to dbt run results. This allows users to access and analyze the data causing test failures quickly.

2. Comprehensive metadata: Each alert includes detailed information about the failure, such as the specific models or tests that failed, full error messages, and the number of failing records.

The result: a powerful, free tool for all dbt users

This was an incredibly fun and rewarding project to work on. Figuring out how to extract the information we needed from dbt was challenging, but worth it for more granular, context-rich alerts and more precise routing. As we continue to refine and expand our dbt alerting tool, we're excited to see how different data teams leverage it.

Head here to set up your own dbt alerts. You’ll probably want to check out the docs, too.

Or, if you want to start downloading only test failure results, we open-sourced our macro so you can use it in your own project.
