Best data quality tools in 2025 (and how to pick the right one)

Data quality is becoming more important than ever, and it's important to have the right tools in your stack to keep up. Here's a list of the top data quality tools for your team in 2025.

According to a report from IBM, the number one challenge for generative AI adoption in 2025 is data quality.

Everything that data teams do hinges on the assumption that data quality is in good shape—but that doesn't magically happen. It takes work, and it takes the right data quality tools.

In this guide, we'll cut through the marketing hype and break down different types of data quality tools, what they do, and how to pick the right one for your specific data challenges.

What is data quality?

Before we dive deep into data quality tools, let's establish a quick baseline understanding of what data quality is.

- Data quality is the degree to which data serves an external use case or conforms to an internal standard.

- A data quality issue occurs when the data no longer serves the intended use case or meets the internal standard.

- A data quality incident is an event that decreases the degree to which data satisfies an external use case or internal standard.

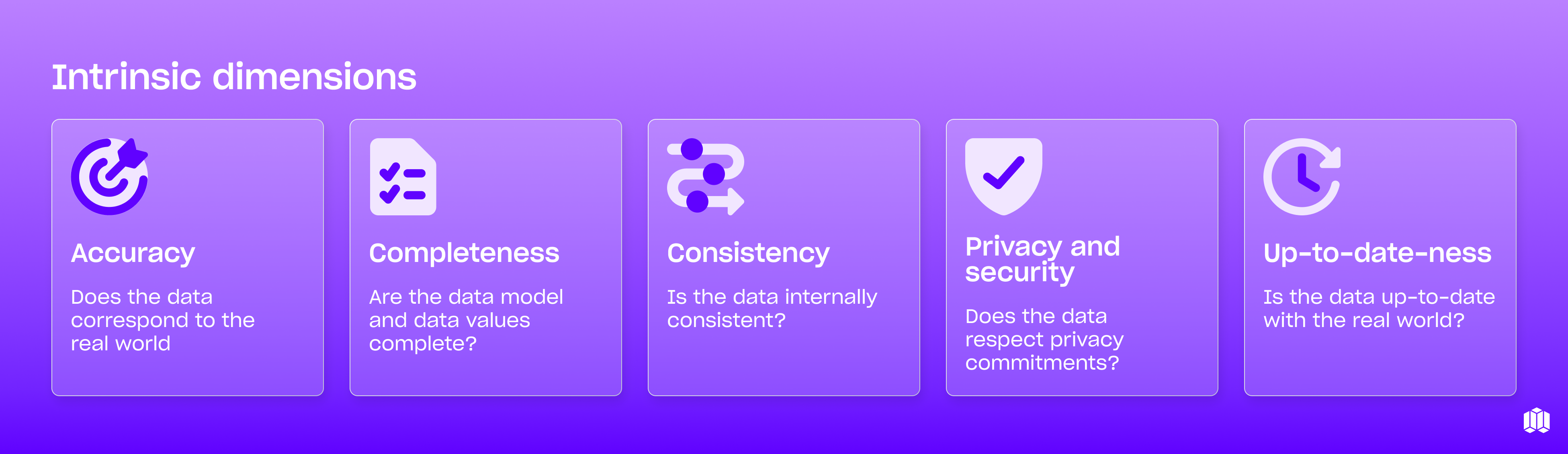

There are ten dimensions of data quality, spread across two different categories. First, there are intrinsic dimensions, which are independent of use case. Those dimensions are:

- Accuracy: Does the data correspond to the real world?

- Completeness: Are the data models and values complete?

- Consistency: Is the data internally consistent?

- Privacy & security: Does the data respect privacy commitments?

- Up-to-dateness: Is the data up-to-date with the real world?

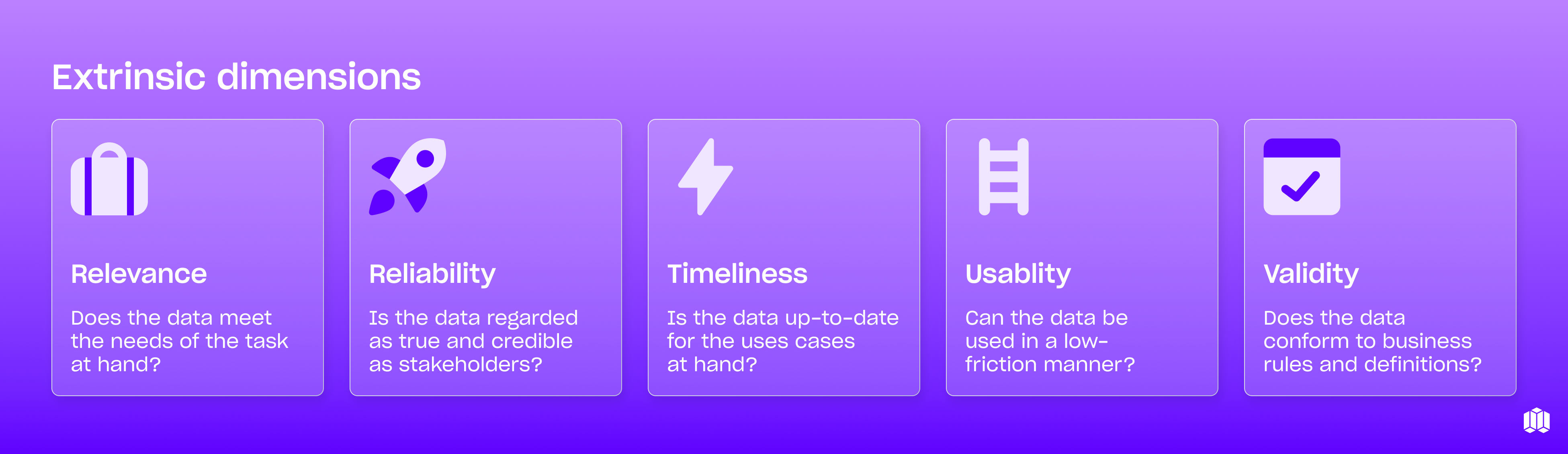

Then, there are the extrinsic dimensions of data quality that are more dependent on how you're actually using the data. Those dimensions are:

- Relevance: Does the data meet the needs of the task at hand?

- Reliability: Is the data true and credible?

- Timeliness: Is the data up-to-date for the use case?

- Usability: Can the data be used in a low-friction manner?

- Validity: Does the data conform to business rules and definitions?

How do you measure and improve your data quality

Improving data quality isn’t a one-and-done project—it’s an ongoing, always evolving process. Here’s how successful organizations approach it:

- Define data quality metrics specific to your business context What makes data “good enough” depends entirely on how you’re using it. Define what that looks like for each use case.

- Establish baselines and targets You can’t improve what you don’t measure. Start by understanding your current quality levels, then set improvement goals.

- Implement quality controls at multiple points Don’t wait until data reaches dashboards to check quality. Build controls into collection forms, ETL processes, and transformation logic.

- Automate monitoring and testing. Manual quality checks don’t scale. Use automated data quality tools to continuously validate data against defined expectations.

- Create clear ownership and accountability. Define owners for key domains so you know who's working on data quality issues when they arise.

Pro tip: Start small with your most critical data assets. It’s better to ensure perfect quality for the dashboards your CEO actually looks at than to boil the ocean.

What's a data quality tool?

Data quality tools are specialized software solutions designed to help you identify, monitor, and address data quality issues throughout your data pipeline. Think of them as the guardrails keeping your data on track.

Unlike general-purpose data tools (like your average ETL platform), data quality tools are specifically built to enforce quality standards, detect anomalies, and provide visibility into the health of your data assets.

The best data quality tools don't just tell you something's wrong—they help you understand why it's happening and how to fix it. They transform data quality from a reactive fire drill into a proactive, manageable process.

These tools typically provide capabilities like:

- Automated profiling to understand data characteristics

- Rule-based validation to enforce quality standards

- Anomaly detection to catch unexpected changes

- Alerting to notify teams when issues arise

- Reporting to track quality metrics over time

Most importantly, good data quality tools integrate seamlessly into your existing workflows—whether that's your dbt process, your CI/CD pipeline, or your dashboard refresh schedule.

Types of data quality tools

Not all data quality tools are created equal. Each category addresses different aspects of the quality challenge:

Data quality software plays a crucial role in enhancing decision-making and operational efficiency by providing accurate and reliable data.

Best data quality tools at a glance

Data discovery tools

What is data discovery?

Data management and discovery tools help you understand what data assets exist across your organization, where they’re located, and how they’re structured.

Good data discovery tools will automatically scan your databases, data warehouses, and data lakes to identify tables, columns, relationships, and even sensitive data. They create a searchable inventory that makes it easier for data professionals and business users alike to find, understand, and use data effectively.

Key capabilities include:

- Automated metadata extraction

- Data classification and tagging

- Relationship mapping

- Searchable data catalogs

- Business glossary integration

Data discovery isn't a one-time inventory of your data assets. It's about maintaining an up-to-date understanding of your data pipeline now and as it evolves.

It's also not the same as data profiling (though they're related). While discovery focuses on finding and cataloging data assets, profiling digs deeper into the characteristics and quality of that data.

Best data discovery tools

1. Atlan

Atlan has emerged as a leader in the data discovery space, offering a collaborative platform that combines powerful technical capabilities with an easy user experience. What sets Atlan apart is its "activate metadata" approach that turns passive documentation into actionable intelligence.

Key strengths:

- AI-powered context building that connects related assets automatically

- Slack-like collaboration features including comments and conversations

- Universal search across your entire data ecosystem

- Rich lineage visualization that makes impact analysis intuitive

- Pre-built integrations with 80+ tools in the modern data stack

Atlan particularly shines in organizations embracing modern data mesh or data product approaches. Its collaborative features break down silos between technical and business teams, while its open APIs allow it to evolve with your data ecosystem. For teams drowning in disconnected data assets, Atlan's ability to create a unified data home can be transformative.

2. Amundsen

For organizations looking for an open-source alternative, Amundsen (developed by Lyft) offers an impressive set of data discovery capabilities. Built to solve Lyft's own data discovery challenges, Amundsen has gained traction in the community for its intuitive interface and scalable architecture.

Key strengths:

- Open-source flexibility and customizability

- Graph-based metadata store for rich relationship mapping

- Strong search capabilities powered by Elasticsearch

- Active community development

- Integration with popular data tools like Airflow, dbt, and Tableau

Amundsen is particularly well-suited for data-forward organizations that have the engineering resources to deploy and maintain an open-source solution. Its focus on user experience makes it accessible to both technical and business users, while its extensible architecture allows it to grow with your data ecosystem.

Data testing tools

What is data testing?

Data testing tools enable you to validate your data against defined expectations and quality standards. Much like software testing ensures code functions correctly, data testing ensures your data meets specific criteria before it’s used for analysis or decision-making.

Effective data testing tools allow you to define assertions about your data—like “this column should never contain nulls” or “these two tables should reconcile perfectly”—and continuously check if your data meets these expectations. They catch data quality issues early, before bad data propagates downstream.

Key capabilities include:

- Schema validation (data types, nullability, etc.)

- Data validation (range checks, pattern matching, etc.)

- Referential integrity testing

- Statistical validations

- Automated test case generation

Data testing isn't a reactive approach to data quality. It's about preventing issues from occurring in the first place. It's also not a substitute for good data governance or careful ETL design. Testing helps you catch issues, but you still need robust processes and well-designed pipelines to minimize the occurrence of those issues.

Best data testing tools

1. dbt Tests

For organizations using dbt (data build tool) for transformation, dbt's built-in testing functionality offers a streamlined approach to data testing that integrates directly with your transformation workflows.

Key strengths:

- Tight integration with transformation logic

- Simple YAML-based test definitions

- Both generic and custom test capabilities

- Test results viewable in dbt Cloud

- Natural fit for analytics engineering workflows

dbt Tests are particularly effective for organizations that have already adopted dbt for their transformation layer. The ability to define tests alongside models creates a seamless workflow that encourages testing as part of the development process rather than as a separate activity.

2. Great Expectations

Great Expectations has emerged as the go-to open-source framework for data validation and testing. It allows data teams to define "expectations" about their data and then validate data against those expectations.

Key strengths:

- Extensive library of built-in expectation types

- Detailed data quality reports with rich visualizations

- Integration with popular data orchestration tools

- Support for multiple execution environments

- Active community and commercial support options

Great Expectations excels in environments where data pipelines are being modernized and teams are adopting software engineering best practices for data work. Its Python-based approach makes it accessible to data scientists and engineers alike, while its flexibility allows it to be integrated into virtually any data stack.

Data cleansing and standardization tools

What is data cleansing and standardization?

Data cleansing and standardization tools help you transform messy, inconsistent data into clean, consistent, and usable information. These tools identify and correct errors, inconsistencies, and inaccuracies in your data—ensuring that downstream analytics and applications receive high-quality inputs.

Key capabilities include:

- Deduplication and record matching

- Format standardization

- Address verification and normalization

- Name parsing and standardization

- Data enrichment from reference datasets

Data cleansing isn't a substitute for good data collection practices. While cleaning tools can fix many issues, it's always better to capture high-quality data from the start.

Best data cleansing and standardization tools

1. Alteryx (formerly Trifacta)

Alteryx is a leader in the data wrangling space, offering a powerful yet intuitive platform for data cleansing and standardization. Its visual interface allows both technical and non-technical users to transform messy data into clean, structured formats.

Key strengths:

- Visual interface with intelligent recommendations

- Self-service data preparation capabilities

- Strong profiling and quality assessment features

- Support for complex transformations

- Integration with major cloud platforms

Alteryx particularly shines in organizations looking to democratize data preparation, allowing business analysts and data scientists to clean and standardize data without heavy reliance on engineering resources.

2. OpenRefine

For teams looking for an open-source solution, OpenRefine (formerly Google Refine) offers powerful data cleaning capabilities in a free, desktop-based application. Despite its simplicity, OpenRefine packs sophisticated features for tackling common data quality issues.

Key strengths:

- Open-source and free to use

- Powerful clustering algorithms for entity resolution

- GREL expression language for complex transformations

- Reconciliation with external data sources

- Non-destructive operations with full edit history

OpenRefine is particularly well-suited for smaller teams or individual data practitioners who need to clean moderate-sized datasets without investing in enterprise software. Its clustering capabilities are especially valuable for standardizing messy categorical data.

Data observability tools

What is data observability?

Data observability tools provide continuous, automated monitoring of your data pipelines and assets to detect issues, anomalies, and drift as they occur. Much like DevOps observability monitors application health, data observability keeps tabs on the health of your data ecosystem.

These tools integrate seamlessly with the modern data stack, enhancing data quality management by providing features like monitoring, observability, and governance tailored to the complexity and requirements of an organization's data infrastructure.

Effective data observability tools go beyond basic monitoring to provide context, root cause analysis, and actionable insights when problems occur. They help data teams shift from reactive firefighting to proactive management of data quality.

Key capabilities include:

- Automated anomaly detection

- Freshness and volume monitoring

- Schema change detection

- Lineage-aware impact analysis

- Intelligent alerting with minimal noise

Data observability isn't just setting up dashboards and thresholds. It's not about overwhelming teams with alerts or creating more noise—it's about providing meaningful signals that help maintain data reliability.

Best data observability tools

1. Metaplane

You're on the Metaplane blog right now, so I appreciate any skepticism, but if you're looking for a tool that will monitor your entire data stack for quality issues automatically, then Metaplane is for you.

Metaplane does a lot of the work that other data quality tools do—all rolled into one automated platform. By monitoring your entire data pipeline from end to end, you can prevent, detect, and resolve data quality issues before they effect stakeholders.

Key benefits of Metaplane include

- ML-powered anomaly detection that reduces noise

- Data lineage and impact analysis

- Data CI/CD

- Schema change alerts

- Integration with popular warehouses, BI tools, and notification systems

- Fast setup time

Metaplane does a lot of the work that other data quality platforms do, all rolled into one automated platform. By monitoring your pipeline from end to end, you'll always catch data quality issues before they impact stakeholders. Then, with the visibility that our data lineage and impact analysis provides, you'll be able to resolve issues quickly, too.

.png)

2. Elementary Data

For teams looking for an open-source alternative, Elementary Data offers a compelling data observability solution built on dbt and other open-source technologies.

Key strengths:

- Open-source flexibility

- Native integration with dbt

- Familiar SQL-based monitoring definitions

- Automated reporting of test results

- Simple deployment in your existing environment

Elementary Data is well-suited for organizations that have already invested in dbt and want to extend their testing capabilities with observability features—though it's limited if you want to extend observability to the rest of your pipeline. Its open-source approach allows for customization and extension to meet specific requirements, while its familiar SQL-based configuration makes it accessible to analytics engineers.

Data governance tools

What is data governance?

Data governance tools help you implement and enforce policies, standards, and controls for managing an organization's data quality across your organization. They provide the framework for ensuring data is secure, compliant, and used appropriately throughout its lifecycle.

Key capabilities include:

- Policy definition and enforcement

- Data classification and security controls

- Data access management

- Privacy compliance tooling

- Data quality rule management

Data governance isn't just about implementing restrictive controls. It's not about creating bureaucracy or making data harder to access—it's about enabling safe, compliant, and effective use of data assets.

Best data governance tools

1. Collibra

Collibra has established itself as a leader in the data governance space, offering a comprehensive platform that covers the full spectrum of governance capabilities. What sets Collibra apart is its business-friendly approach that balances governance requirements with usability.

Key strengths:

- Comprehensive data catalog with strong governance features

- Powerful workflow capabilities for policy management

- Robust privacy and compliance tooling

- Business glossary with term mapping

- Strong lineage visualization

Collibra particularly shines in regulated industries where governance requirements are complex and the stakes for compliance are high. Its workflow capabilities help formalize governance processes, while its business-friendly interface encourages adoption beyond the data team.

2. Apache Atlas

For organizations looking for an open-source solution, Apache Atlas offers a robust framework for data governance and metadata management. Originally developed as part of the Hadoop ecosystem, Atlas has evolved to support a wide range of data sources and use cases.

Key strengths:

- Open-source flexibility

- Strong classification and lineage capabilities

- Integration with security tools like Apache Ranger

- Extensible type system

- Active community development

Apache Atlas is well-suited for organizations with significant technical resources that want to build a customized governance solution. Its extensible architecture allows it to adapt to complex requirements, while its Hadoop heritage makes it particularly strong for big data environments.

Data remediation and enrichment tools

What is data remediation and enrichment?

Data remediation and enrichment tools help you fix identified data quality issues and enhance your data with additional context and information. While remediation focuses on correcting errors and inconsistencies, enrichment adds value by incorporating external data sources or derived attributes.

Key capabilities include:

- Automated error correction

- Record linking and entity resolution

- Third-party data integration

- Geocoding and spatial enrichment

- Derived attribute calculation

Data remediation isn't just about applying quick fixes. It's not about masking underlying issues in your data collection or management processes—it's about systematically addressing root causes while providing clean data for immediate needs.

Best data remediation and enrichment tools

1. Tamr

Tamr has pioneered the use of machine learning for data mastering and remediation, offering a powerful platform that can tackle even the most complex data integration challenges. What sets Tamr apart is its ability to combine automation with human expertise to achieve high-quality results at scale.

Key strengths:

- ML-powered entity resolution and record matching

- Human-in-the-loop feedback mechanisms

- Scalable architecture for handling massive datasets

- Support for complex hierarchical relationships

- Cloud-native deployment options

Tamr particularly excels in scenarios involving large-scale entity resolution across multiple systems—like customer or product mastering in large enterprises. Its machine learning approach allows it to handle degrees of complexity and scale that would be impractical with rules-based systems.

2. DataCleaner

For teams looking for an open-source solution with strong remediation and enrichment capabilities, DataCleaner offers a comprehensive set of data quality tools that can handle everything from basic profiling to complex transformations.

Key strengths:

- Open-source accessibility with community support

- Powerful data profiling and visualization capabilities

- Strong pattern matching and standardization features

- Support for both batch and real-time processing

- Extensible architecture with plugin capabilities

DataCleaner particularly shines for teams that need flexible data remediation workflows without the enterprise price tag. Its visual interface makes it accessible to analysts with limited coding experience, while its extensibility allows engineers to create custom transformations when needed.

Data quality tooling FAQ

Wondering how to pick the right data quality tool stack? Here are a handful of questions to ask as you begin the process?

How much should we expect to budget for data quality tools?

This is like asking how much a house costs—it depends on what you need! Entry-level open source tools can be free (though they'll require engineering time), while enterprise-grade solutions can run $50K+ annually.

Most mid-sized data teams find success starting with focused tools addressing their biggest pain points (often data observability or testing) for $15-30K annually, then expanding as they demonstrate ROI.

You can try Metaplane for free! Paid plans start at $10 per monitored table, per month. Get more details here.

Should we build or buy our data quality solution?

Here's the honest truth: unless data quality tooling IS your product, building robust solutions in-house almost always costs more than you think.

The initial MVP might seem straightforward, but maintaining, scaling, and evolving homegrown tools quickly eats engineering resources. Most successful teams use a hybrid approach—commercial tools for core capabilities, complemented by custom components for unique requirements.

Dive deeper into buying versus building a data observability tool.

Do we need a dedicated data quality person/team?

Not initially. The most successful approach I've seen is embedding quality ownership within existing data roles rather than creating a separate quality silo. Your analytics engineers, data engineers, and analysts should all own quality for their domains.

As you scale, having a "data quality champion" who coordinates tools and best practices across teams becomes valuable—but this is often a part-time role for someone already on the data team.

How do we convince leadership to invest in data quality tools?

Skip the technical arguments and focus on business impact. Document specific instances where bad data led to:

- Revenue impact (wrong sales forecasts)

- Wasted resources (marketing to duplicate customers)

- Missed opportunities (slow data discovery)

- Brand damage (incorrect customer communications)

Quantify the cost of these incidents, then show how specific tools would have prevented them. Nothing opens wallets faster than connecting quality to dollars.

How do data quality tools differ from data governance platforms?

While there's some overlap, think of governance as the broader organizational framework (policies, ownership, standards) while quality tools are the technical implementations that enforce and monitor those standards.

Governance platforms typically include basic quality capabilities, but dedicated quality tools offer deeper functionality for specific use cases. Many teams implement both, with governance defining the rules and quality tools enforcing them.

When should we implement data quality tools?

Earlier than you think. You might think you need to wait until you're "further along," but in reality, data quality tools provide the most value when implemented alongside your core infrastructure, not after it.

Even teams with basic data warehousing benefit from simple testing and observability. As your stack matures, your quality tools can evolve accordingly. The key is starting with focused solutions for your highest-value data rather than boiling the ocean.

Can't our existing ETL/ELT tool handle data quality checks?

While platforms like Airflow or dbt offer basic quality capabilities, they weren't built with improving data quality as their primary focus. They're great for simple validation checks but typically lack the depth needed for robust quality management. Most data teams complement their ETL/ELT tools with dedicated data quality tool.

Choosing the right data quality tool

As we’ve seen, the landscape of data quality tools is diverse, with specialized solutions addressing different aspects of the data quality challenge. When selecting the right data quality tool for your organization, consider these key factors:

- Current pain points: Focus first on tools that address your most pressing data quality challenges

- Existing tech stack: Look for tools that integrate well with your current data infrastructure

- Team capabilities: Consider whether you have the skills to implement and maintain complex solutions

- Scalability needs: Ensure the data quality tools can grow with your data volumes and complexity

- Budget constraints: Picking the data quality tool with the most impact per dollar.

Remember that data quality is a journey, not a destination. The right combination of tools, processes, and people will help you progressively improve your data quality over time.

By investing in the right data quality tools today, you’re not just solving immediate problems—you’re building the foundation for a data-driven organization where decisions are made with confidence, based on data that can be trusted.

Try Metaplane for free

Ready to give Metaplane a try? Sign up for free, or talk to our team if you have more questions. We'd love to hear more about what issues you're working on, and how we can help you improve data quality and data reliability across your organization.

Table of contents

Tags

...

...