Two ways to set up alerts in dbt

The sooner you can resolve job failures in dbt, the better. In this article, we'll walk you step-by-step through two different ways to set up alerts in dbt, and tell you why we prefer one method to the other.

dbt failures can be a metaphorical “canary in the coal mine.” When failures occur, you can be pretty sure bigger issues are coming, so the sooner you can address your dbt failure, the less likely stakeholders are to encounter stale, missing, or inaccurate data.

It’s important, then, to find out about dbt failures as soon as possible. To do so, you’ll want to have dbt alerts set up to notify you of each failed job.

Setting them up isn’t hard. In this post, we’ll walk you through two ways to do it:

- Natively using dbt Cloud notifications

- Using Metaplane’s free dbt alerting tool for more context-rich alerts

If you’re a dbt Core user, skip down to the Metaplane dbt alerting section.

How to set up alerts in dbt Cloud

If you’re a dbt Cloud developer user or admin user, you can use the dbt Cloud platform to configure job-level or model-level notifications. However, as of December 9th, 2024, model-level notifications can only be sent by email.

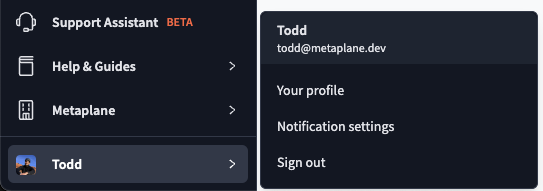

To get started, log in to dbt Cloud and click on your username in the bottom left-hand corner. Then, navigate to Notification settings.

Job-level notifications

Job-level notifications can be configured for both email and Slack. Let’s start with email.

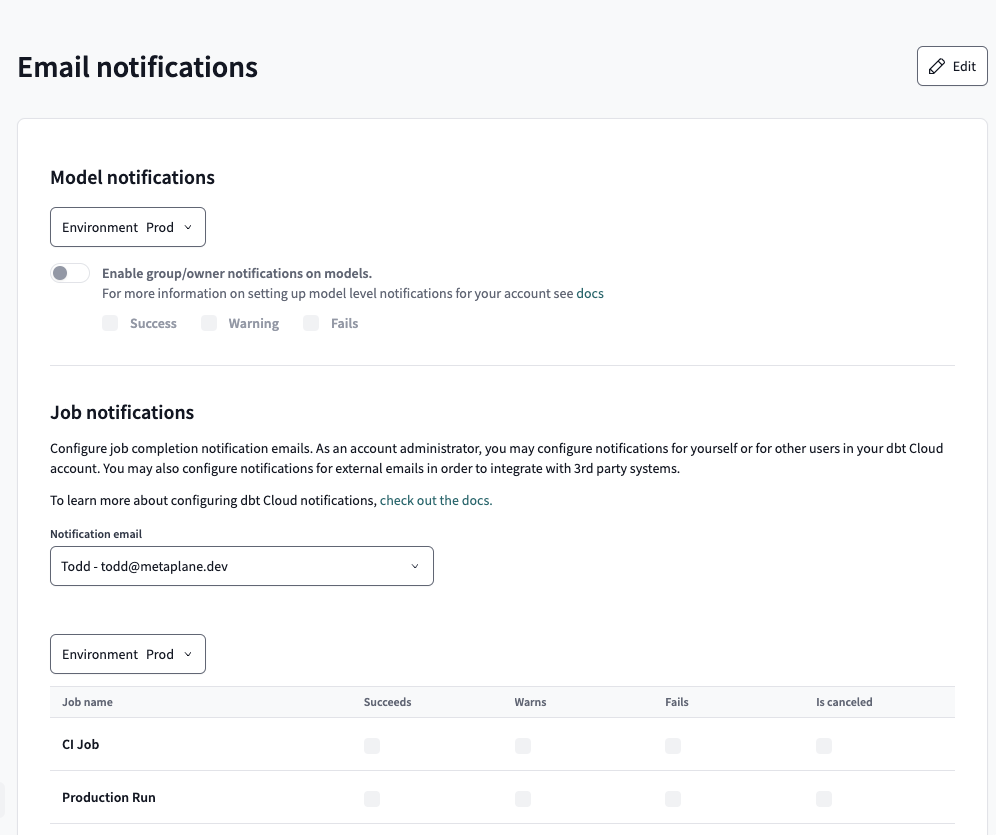

Update the Notification email value to select the email you’d like to configure alerts for. To send notifications to an email outside your dbt Cloud organization, choose Add external email.

Once you’ve selected an email address, click the Edit button in the upper right-hand corner. Clicking this button will allow you to edit the checkboxes within the job table.

Now, you can update the job-level events that you want to be notified of.

Slack

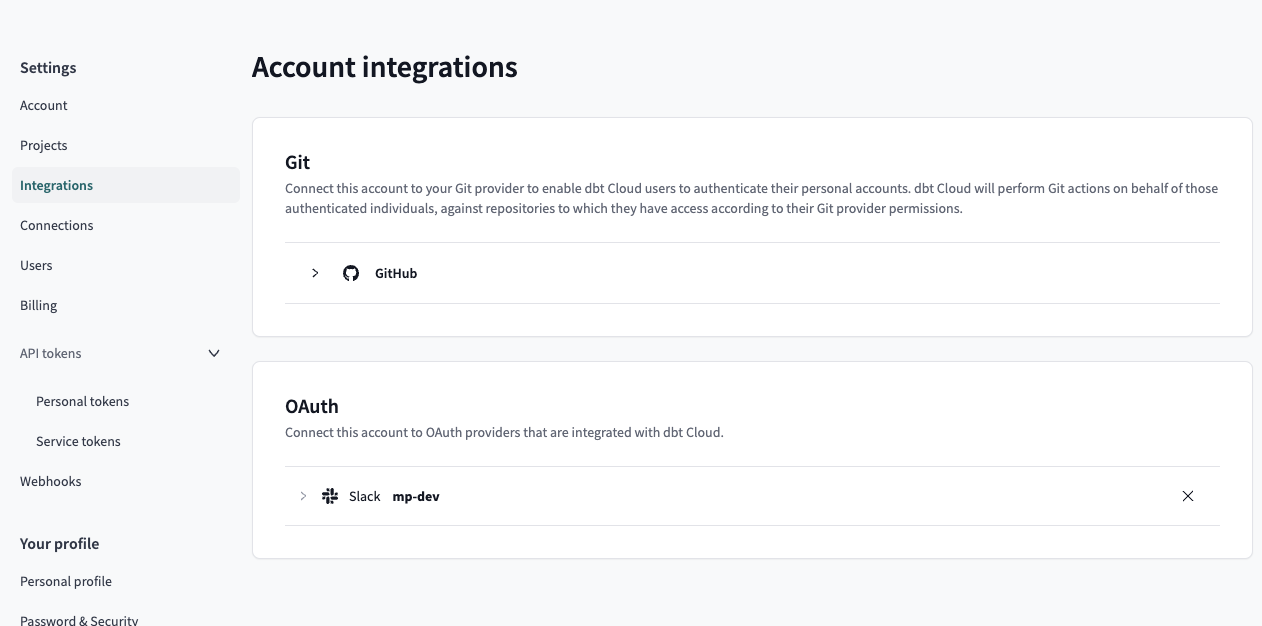

Slack configuration is very similar to email configuration, except that you’ll first need to authorize dbt Cloud with Slack via OAuth.

Navigate to the Integrations settings. Then, in the OAuth section, link Slack with dbt.

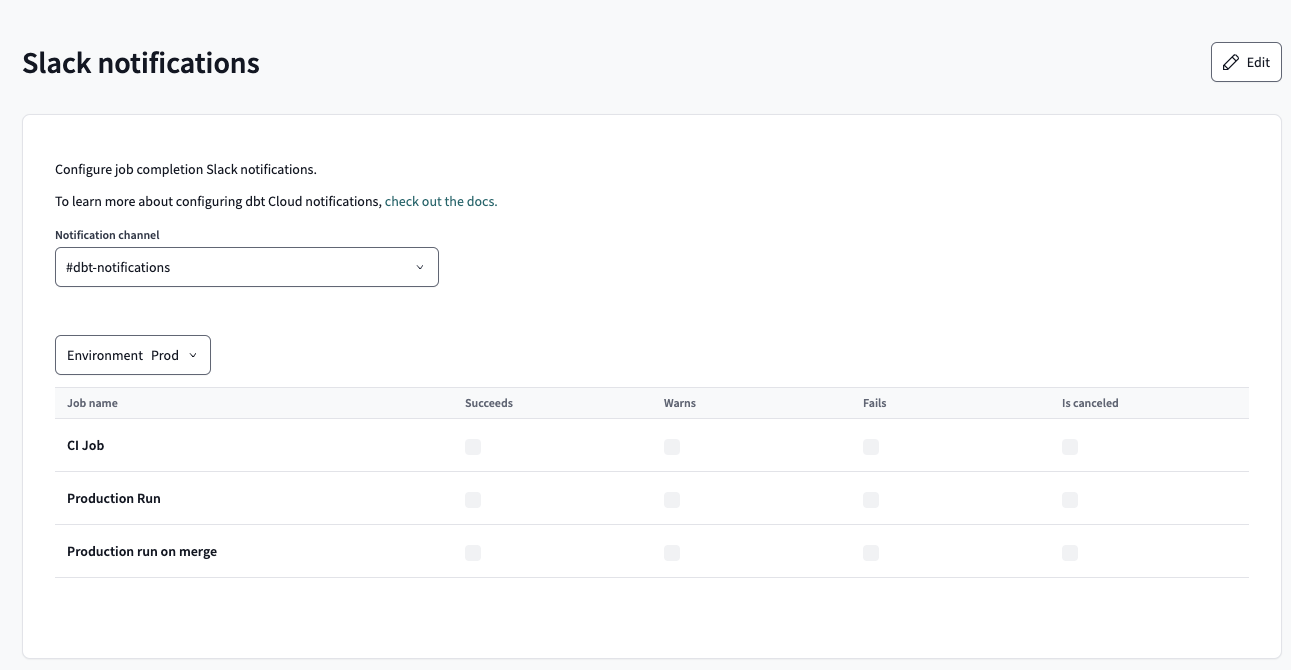

Once you’ve linked Slack, navigate to the Slack notification settings.

Select a Slack channel then select Edit in the top-right corner to subscribe the selected channel to specific job-level events. Your end result should look like the image below.

Model-level notifications

As of December 9th, 2024, dbt Cloud’s model-level notifications have three limitations:

- They can only be sent to email, not Slack

- A model-level event (success, warning, failure) can only be sent to one email: the model’s owner. This means if you want a specific model event to go to a group of people, you’ll need to create an email group and use that email as the model’s owner.

- You can’t route model-level events based on the event that occurred. For example, you can’t send failures to one email while sending warnings to another.

dbt Cloud’s model-level notifications functionality heavily depends on their concept of groups and owners. You can read more about groups and owners here. To boil it down:

- An owner can be defined with arbitrary properties, but name and email are required.

- A group has a name and an owner.

- A model can be associated with a group.

Essentially, once your DAG has been appropriately decorated with group/owners, you can enable group/owners notifications.
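To make the owner, group, and model relationship concrete, here is a minimal Python sketch of how a model-level event resolves to a single email address. It is only an illustration of the concept described above, not dbt Cloud’s implementation. The class names, the `notification_email` helper, and the sample group and model names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Owner:
    # name and email are the two required owner properties
    name: str
    email: str


@dataclass(frozen=True)
class Group:
    # A group has a name and exactly one owner
    name: str
    owner: Owner


def notification_email(model_group: Optional[str], groups: dict[str, Group]) -> Optional[str]:
    """Return the owner email for a model's group, or None if the model has no known group."""
    if model_group is None or model_group not in groups:
        return None
    return groups[model_group].owner.email


# Hypothetical project: one group, one grouped model, one ungrouped model.
groups = {"finance": Group("finance", Owner("Finance Team", "finance-data@example.com"))}
models = {"fct_invoices": "finance", "stg_events": None}

recipients = {name: notification_email(group, groups) for name, group in models.items()}
print(recipients)
```

Note that the lookup never considers the event type, which mirrors the limitation above: success, warning, and failure events for a model all go to the same owner email.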

To enable group/owner notifications, click the Edit button in the upper right-hand corner of the Email notifications page, then toggle Enable group/owner notifications on models and select the events of interest.

Now, subsequent runs should route alerts based on your group/owner configurations.

How to set up dbt alerts using Metaplane

Now that we’ve talked through how to set up alerts natively in dbt Cloud, let’s walk through a new method: using Metaplane’s free dbt alerting tool.

We built our own dbt alerting tool because we found native dbt Cloud notifications lacking in two key areas: context and routing.

Not every dbt failure carries the same weight. Sometimes, the problem isn’t too urgent, and you can finish making your cup of coffee before opening your laptop to resolve it. But other times, it’s a put-the-coffee-down-and-sprint-to-your-laptop kind of issue. It helps to know the difference immediately.

Metaplane’s dbt alerts help you assess the situation by providing more context right in the alert itself. And with more granular routing, you can make sure each alert finds the right person or team to do something about it.

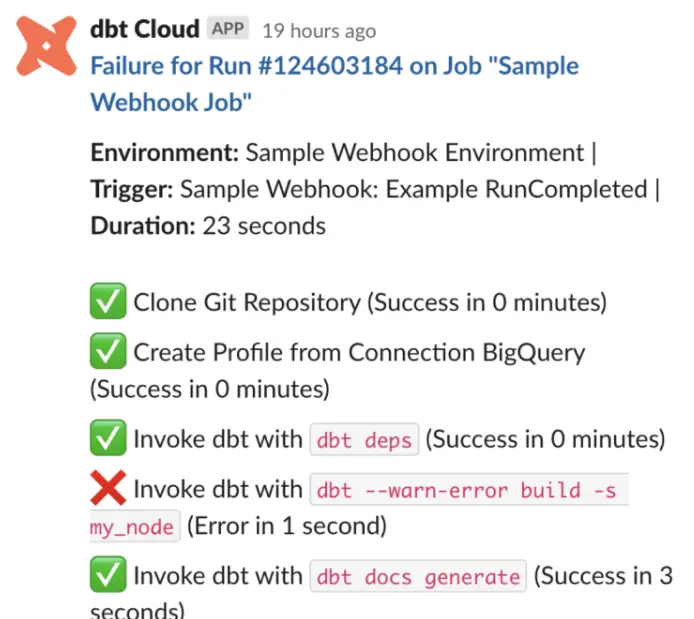


Follow the instructions below to set up dbt alerts with Metaplane's free alerting tool.

Set up your Metaplane account

First, create a free account if you don’t have one already. Once you create an account you’ll be prompted to select your flavor of dbt: Core or Cloud.

Connect Metaplane and dbt

To properly dispatch dbt alerts, Metaplane needs to know about your jobs and how they went. This step differs slightly depending on whether you’re a dbt Cloud or Core user.

dbt Cloud

If you’re a dbt Cloud user you’ll need to provision an API token that Metaplane can use to access metadata related to your DAG.

Once you connect Metaplane to your dbt Cloud instance, it’ll take a couple of minutes for the first sync to complete.

dbt Core

If you’re a dbt Core user, you’ll need to send us metadata related to your dbt runs.

To get started, you can send us artifacts from a dbt run that you performed locally. However, you’ll want to fold Metaplane into your CI pipeline so that important job runs are automatically reported to Metaplane. We’ll touch on that later.

The project-name and job-name arguments are used for naming/organizing within the Metaplane UI. You can also decide on different names later.

Once you’ve sent Metaplane the artifacts, your screen will automatically refresh.
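If you’re curious what those artifacts contain, the sketch below reads a `run_results.json` file (which dbt writes to the `target/` directory after a run) and lists the nodes that failed. It is a rough illustration of the kind of metadata involved, not how Metaplane processes artifacts. The helper names and the sample data are hypothetical, and we’re assuming the `error` and `fail` statuses are the ones you care about.

```python
import json
from pathlib import Path

# Assumption: "error" (model or test could not run) and "fail" (test assertion
# failed) are the statuses worth alerting on.
FAILURE_STATUSES = {"error", "fail"}


def failed_nodes(run_results: dict) -> list[str]:
    """Return the unique_id of every result whose status marks a failure."""
    return [
        result["unique_id"]
        for result in run_results.get("results", [])
        if result.get("status") in FAILURE_STATUSES
    ]


def load_run_results(path: str = "target/run_results.json") -> dict:
    """Read the run_results.json artifact from a dbt project's target directory."""
    return json.loads(Path(path).read_text())


# A trimmed, made-up example of the artifact's shape.
sample = {
    "results": [
        {"unique_id": "model.shop.orders", "status": "success"},
        {"unique_id": "model.shop.customers", "status": "error"},
        {"unique_id": "test.shop.not_null_orders_id", "status": "fail"},
    ]
}
print(failed_nodes(sample))
```

Against a real project, you would call `failed_nodes(load_run_results())` from the project root after a run.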

Configuring alert rule node matching

At this point, Metaplane has successfully connected to your dbt metadata. Now, it’s time to configure alert rules.

Metaplane’s interface will walk you through creating your first rule.

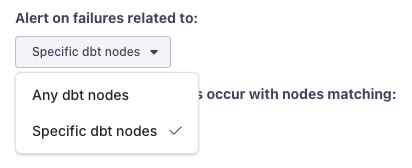

As mentioned earlier, one of the main differentiators with Metaplane’s dbt alerting functionality is the granularity at which you can configure rules. For that reason, let’s choose to create a rule based on specific dbt nodes.

Now, you’ll be able to specify which node failures should trigger an alert.

Tags

With tag matching, you can choose to be alerted to failures that occur on nodes with specific tags.
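Conceptually, tag matching is a filter over the nodes that failed. The Python sketch below shows one way to express it. It assumes a rule fires when a node carries at least one of the rule’s tags, which is our simplification for illustration and may not reflect Metaplane’s exact semantics. The function name and the sample nodes are hypothetical.

```python
def matches_any_tag(node_tags: list[str], rule_tags: list[str]) -> bool:
    """True when the node carries at least one of the rule's tags (assumed semantics)."""
    return not set(rule_tags).isdisjoint(node_tags)


# Hypothetical failed nodes and the dbt tags attached to each.
failed = {
    "model.shop.orders": ["finance", "daily"],
    "model.shop.web_sessions": ["marketing"],
    "model.shop.scratch": [],
}

rule_tags = ["finance", "critical"]
to_alert = [node for node, tags in failed.items() if matches_any_tag(tags, rule_tags)]
print(to_alert)
```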

Location

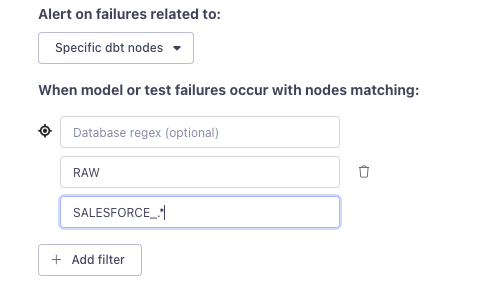

With location matching, you can match nodes based on their location within your warehouse.

Note that each segment of the location can be expressed using a regular expression. In the example above, we’re matching failures of models in the `RAW` schema whose names start with the `SALESFORCE_` prefix.
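To show how segment-by-segment regular expressions behave, here is a small Python sketch. It assumes a location is a (database, schema, table) triple and that each pattern must match its whole segment. Both are assumptions made for illustration rather than a description of Metaplane’s matcher, and the database and table names are made up.

```python
import re

Location = tuple[str, str, str]  # assumed shape: (database, schema, table)


def matches_location(location: Location, pattern: Location) -> bool:
    """True when every segment fully matches its corresponding regular expression."""
    return all(
        re.fullmatch(segment_pattern, segment) is not None
        for segment_pattern, segment in zip(pattern, location)
    )


# Any database, the RAW schema, and tables that start with SALESFORCE_.
rule = (".*", "RAW", "SALESFORCE_.*")

in_scope = matches_location(("ANALYTICS", "RAW", "SALESFORCE_ACCOUNTS"), rule)
out_of_scope = matches_location(("ANALYTICS", "RAW", "STRIPE_CHARGES"), rule)
print(in_scope, out_of_scope)
```

Using a full match per segment keeps a pattern like `RAW` from accidentally matching a schema named `RAW_ARCHIVE`.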

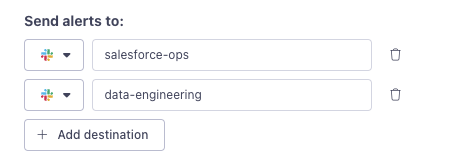

Configuring alert destinations

Now that you’ve defined the nodes of interest for your first rule, let’s configure where the related alerts should go.

If you choose to send alerts to Slack, we’ll prompt you later to install our Slack app. If you’re using Microsoft Teams, check out our docs on how to generate a webhook URL.

Select Next when you’re done building your first rule.

Update your dbt project

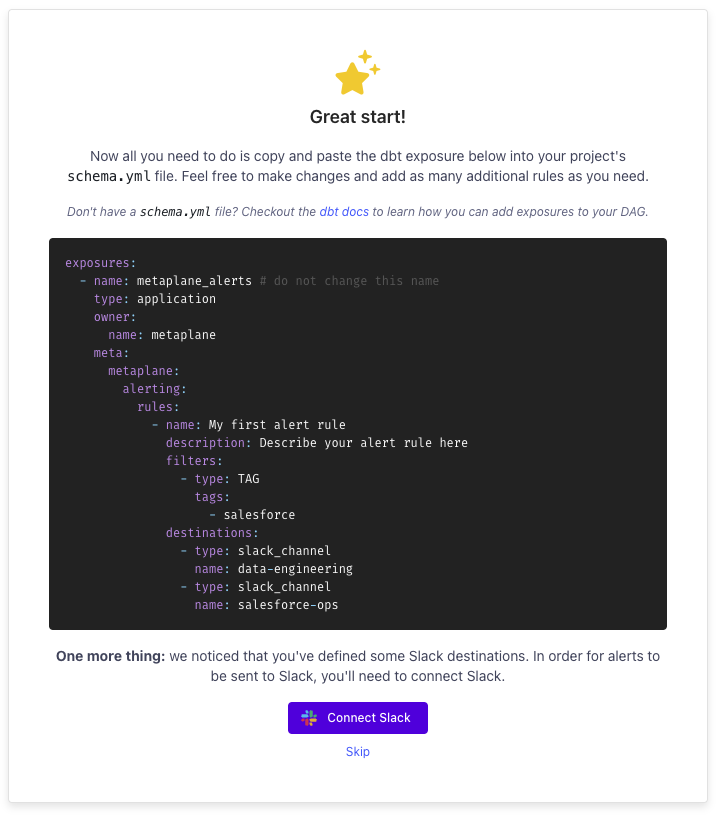

Now it’s time to add the alert-rules-as-code to your dbt project. Feel free to add additional rules. Each time Metaplane is notified of a dbt run, it uses these rules to dispatch alerts.

If you choose to receive your alerts in Slack, make sure to connect Slack by selecting the Connect Slack button.

dbt Cloud

If you’re using dbt Cloud, you’ll need it to start executing runs with the new Metaplane exposure.

Leveraging your CI job is the best way to iterate on the alert rules and test that they’re working. If your CI job is triggered by source control branches, push a branch with the new exposure as well as an intentional model or test error.

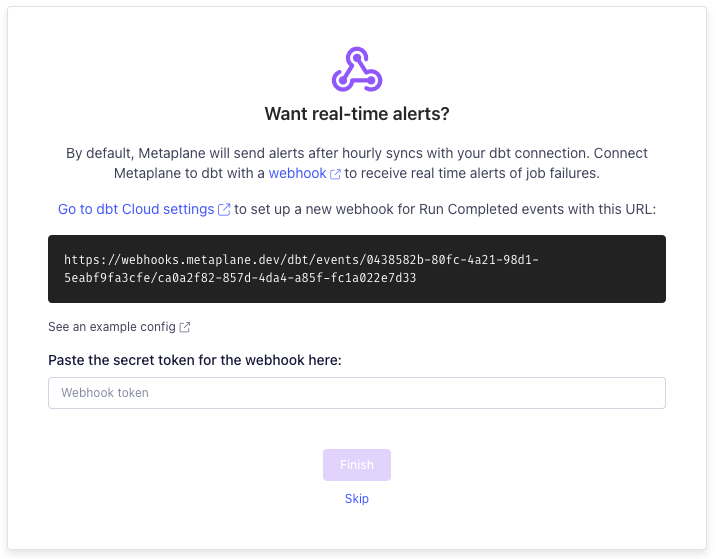

You'll need to configure a webhook so that Metaplane can be notified about failures as soon as they occur. To do so, go to dbt Cloud settings, set up a new webhook for Run Completed events with the URL Metaplane provides, and paste the secret token back into Metaplane.

If you don’t want a CI job, the feedback loop will be a bit longer—syncing hourly rather than in real-time.

dbt Core

If you’re using dbt Core, once you’ve updated your project by adding the exposure above, you can test your rules by causing local failures and then sending the results to Metaplane using the same `curl` + `python` command from earlier. Feel free to do this as many times as needed to fine-tune your rules.

You should receive alerts within moments of reporting a failed run. When you’re done, push your changes to source control.

The last step is to instrument your CI pipeline so that dbt runs are reported to Metaplane automatically. Our dbt Core docs outline how to do that using either the Metaplane CLI or the same Python script you’ve been using locally.

Resolve dbt failures faster with dbt alerts

dbt job failures are often the first signal of what can become a much larger downstream issue, so the sooner you can do something about them, the better.

Native dbt Cloud alerts can help with that, but for alerts with richer context that let you know if a failure is a drop-everything-and-fix-it-ASAP kind of failure, we’d recommend using Metaplane dbt alerts.

If you’re new to Metaplane, we’re an end-to-end data observability platform that helps data teams increase quality and trust in their data. If you want to learn more, sign up for a free account, or talk to our team!
